On the way back from Hangzhou to Xiamen, the sky was really beautiful.

OpenManus

Overview

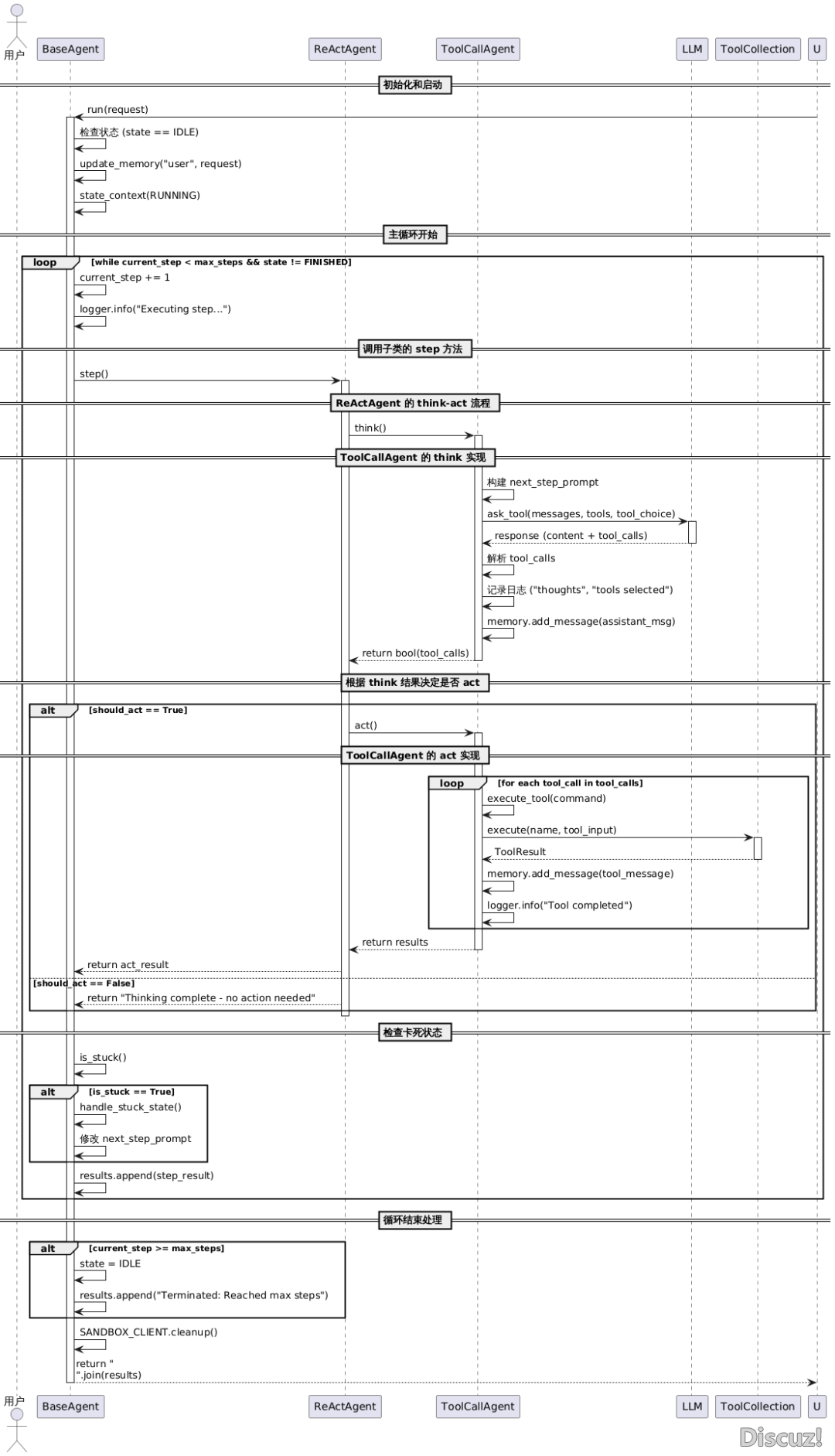

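The rough flow from the user submitting a task to the agent actually running:

Relationships between the core components

To implement its agents, OpenManus uses a compact inheritance hierarchy: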

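- BaseAgent is the abstract base class of every agent. It defines the core attributes and lifecycle management methods, plus a unified interface and basic capabilities such as the run function.
- ReActAgent implements the "reasoning and acting" (ReAct) paradigm, so that at each decision step the agent can both think (reason) and call tools (act).
  - It forces subclasses to keep thinking (think) and acting (act) separate.
  - It also supports the "think without acting" case.
- ToolCallAgent builds on ReActAgent and strengthens the tool-calling abstraction, with support for choosing among multiple tools, invoking them, and handling their results.
- Manus is the general-purpose agent. It calls local and remote (MCP) tools through one unified interface and can handle a wide range of general tasks.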

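In addition, there are two main tool-facing core components:

- ToolCollection manages a set of tools (BaseTool) in one place and supports batch adding, lookup, execution, and similar operations.
- MCPClients manages the connections to multiple MCP (Model Context Protocol) servers.

Their relationships are as follows: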
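In code, these relationships could be sketched roughly like this. The class names come from the description above, but every body is a placeholder, and the attribute names (`available_tools`, `mcp_clients`, `tool_map`, `sessions`), the `Echo` tool, and treating `MCPClients` as a kind of `ToolCollection` are assumptions made for illustration, not the project's actual code.

```python
from abc import ABC, abstractmethod


class BaseTool(ABC):
    """A single tool; `name` identifies it inside a collection."""
    name: str = "base"

    @abstractmethod
    def execute(self, **kwargs) -> str: ...


class ToolCollection:
    """Registry of tools: batch add, lookup by name, execute."""

    def __init__(self, *tools: BaseTool) -> None:
        self.tool_map = {tool.name: tool for tool in tools}

    def add_tools(self, *tools: BaseTool) -> "ToolCollection":
        for tool in tools:
            self.tool_map[tool.name] = tool
        return self

    def execute(self, name: str, **kwargs) -> str:
        tool = self.tool_map.get(name)
        if tool is None:
            return f"Error: unknown tool {name!r}"
        return tool.execute(**kwargs)


class MCPClients(ToolCollection):
    """Assumed here: a tool collection that also tracks MCP server sessions."""

    def __init__(self) -> None:
        super().__init__()
        self.sessions: dict = {}  # server id -> connection (placeholder)


class BaseAgent(ABC):
    """Abstract root: owns the lifecycle (run) and shared state."""


class ReActAgent(BaseAgent):
    """Adds the think/act split."""


class ToolCallAgent(ReActAgent):
    """Adds tool selection, invocation, and result handling."""

    def __init__(self) -> None:
        self.available_tools = ToolCollection()


class Manus(ToolCallAgent):
    """General-purpose agent combining local tools with remote MCP tools."""

    def __init__(self) -> None:
        super().__init__()
        self.mcp_clients = MCPClients()


class Echo(BaseTool):
    """Hypothetical tool used only for this demo."""
    name = "echo"

    def execute(self, **kwargs) -> str:
        return str(kwargs.get("text", ""))


agent = Manus()
agent.available_tools.add_tools(Echo())
print([cls.__name__ for cls in Manus.__mro__[:4]])
print(agent.available_tools.execute("echo", text="hi"))
```

The point of the sketch is the shape: agents form a single inheritance chain, while tools are attached by composition through a collection object.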

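Agent execution flow

As you can see, execution is not chained linearly inside a single class or file. It is a collaboration between parent and child classes, each contributing one method:

- BaseAgent: run
- ReActAgent: step
- ToolCallAgent: think + act
- Manus: think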
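A minimal, runnable sketch of that delegation chain is shown below. It only mirrors the method split listed above; the stubbed decision logic, `max_steps`, `pending_calls`, `calls_made`, and `context_note` are hypothetical stand-ins for what a real agent would get from the LLM.

```python
import asyncio
from abc import ABC, abstractmethod


class BaseAgent(ABC):
    """Owns the main loop: call step() until finished or max_steps is hit."""

    def __init__(self, max_steps: int = 5) -> None:
        self.max_steps = max_steps
        self.finished = False
        self.request = ""

    async def run(self, request: str) -> list:
        self.request = request
        results = []
        for _ in range(self.max_steps):
            if self.finished:
                break
            results.append(await self.step())
        return results

    @abstractmethod
    async def step(self) -> str: ...


class ReActAgent(BaseAgent):
    """Defines one step as think-then-act; act is skipped if think says so."""

    @abstractmethod
    async def think(self) -> bool: ...

    @abstractmethod
    async def act(self) -> str: ...

    async def step(self) -> str:
        should_act = await self.think()
        if not should_act:
            return "thinking complete, no action needed"
        return await self.act()


class ToolCallAgent(ReActAgent):
    """Toy think/act: 'decides' on a tool call, then 'executes' it."""

    def __init__(self, **kwargs) -> None:
        super().__init__(**kwargs)
        self.pending_calls = []
        self.calls_made = 0

    async def think(self) -> bool:
        # A real agent would ask the LLM here; this stub plans two calls.
        if self.calls_made < 2:
            self.pending_calls = [f"tool_call_{self.calls_made}"]
        else:
            self.pending_calls = []
            self.finished = True
        return bool(self.pending_calls)

    async def act(self) -> str:
        self.calls_made += 1
        return f"executed {self.pending_calls[0]}"


class Manus(ToolCallAgent):
    """Overrides think() to adjust context, then defers to the parent."""

    async def think(self) -> bool:
        self.context_note = f"context prepared for: {self.request}"
        return await super().think()


steps = asyncio.run(Manus().run("demo task"))
print(steps)
```

Each class adds exactly one layer: the loop, the think/act split, the tool handling, and finally the context tweak in Manus.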

So that the LLM gets the most suitable context for each type of task, Manus overrides the think method of ToolCallAgent:

    async def think(self) -> bool:
        """Process current state and decide next actions with appropriate context."""
        if not self._initialized:
            await self.initialize_mcp_servers()
            self._initialized = True

        original_prompt = self.next_step_prompt
        recent_messages = self.memory.messages[-3:] if self.memory.messages else []
        browser_in_use = any(
            tc.function.name == BrowserUseTool().name
            for msg in recent_messages
            if msg.tool_calls
            for tc in msg.tool_calls
        )

        if browser_in_use:
            self.next_step_prompt = (
                await self.browser_context_helper.format_next_step_prompt()
            )

        result = await super().think()

        # Restore original prompt
        self.next_step_prompt = original_prompt

        return result

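It checks whether BrowserUseTool was used in the 3 most recent messages. If so, it temporarily swaps the prompt for a version that includes the browser state, such as:

- The URL and title of the current page
- The number of available tabs
- The page scroll state (how many pixels of content remain above and below)
- The current browser screenshot (if any)

This is a fairly smart prompt optimization: the browser state is fetched only when needed, which avoids unnecessary overhead while still helping the LLM make better decisions and execute more effectively.

Starting from the main loop, let's look at how the pieces are wired together overall: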

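Memory design

OpenManus classifies memory messages by role into four kinds: user, system, assistant, and tool. At most 100 messages are kept per role, evicted in FIFO order, and messages can also store images (used for browser screenshots).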
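The FIFO cap can be illustrated with a small sketch. This is not the project's implementation: the `Message` and `Memory` classes below are simplified stand-ins, the field name `base64_image` is an assumption, and for brevity one cap is applied to the whole message list rather than separately per role.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    role: str  # "user", "system", "assistant" or "tool"
    content: str
    base64_image: Optional[str] = None  # e.g. a browser screenshot


class Memory:
    def __init__(self, max_messages: int = 100) -> None:
        # deque(maxlen=...) drops the oldest item automatically when full (FIFO).
        self.messages: deque = deque(maxlen=max_messages)

    def add_message(self, message: Message) -> None:
        self.messages.append(message)

    def get_recent_messages(self, n: int) -> list:
        return list(self.messages)[-n:]


memory = Memory(max_messages=3)
for i in range(5):
    memory.add_message(Message(role="user", content=f"msg {i}"))
print([m.content for m in memory.messages])
```

With a cap of 3 and five messages added, only the three newest survive. A per-role variant would keep one such deque per role.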

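Because the agents are polymorphic by design (object-oriented), Memory is updated by different classes:

- BaseAgent layer: at startup, run adds the user message; this layer is also responsible for stuck detection.
- ToolCallAgent layer: next_step_prompt is added as a user message, while the LLM response and the tool execution results are added as assistant and tool messages.
- Manus layer: implements context awareness by dynamically modifying next_step_prompt, and handles the image data of browser screenshots.

Memory also helps the agent detect when it is stuck: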

    def is_stuck(self) -> bool:
        """Detect whether the agent is stuck in a loop."""
        if len(self.memory.messages) < 2:
            return False

        last_message = self.memory.messages[-1]
        if not last_message.content:
            return False

        # Count how many earlier assistant messages have identical content
        duplicate_count = sum(
            1
            for msg in reversed(self.memory.messages[:-1])
            if msg.role == "assistant" and msg.content == last_message.content
        )

        return duplicate_count >= self.duplicate_threshold

The strategy for handling a stuck state:

    def handle_stuck_state(self):
        """Handle the stuck state."""
        stuck_prompt = "Observed duplicate responses. Consider new strategies..."
        self.next_step_prompt = f"{stuck_prompt}\n{self.next_step_prompt}"

Prompts

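OpenManus splits its prompts into a System Prompt and a Next Step Prompt:

- System Prompt: the role definition. It sets the agent's basic personality and behavior pattern and tells the LLM "who you are", which defines the agent's identity, capabilities, and overall rules of conduct.
- Next Step Prompt: the action guidance. It tells the LLM "what to do now" and steers the concrete behavior of the current step. It is sent as a user message on every call to think.

The differences are summarized in the table below: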

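| Property | SYSTEM_PROMPT | NEXT_STEP_PROMPT |
| --- | --- | --- |
| Message type | System Message | User Message |
| Change frequency | Static, unchanged during the session | Dynamic, may change at every step |
| Scope | Global role definition | Guidance for the current step |
| Content focus | "Who you are" + "what you can do" | "What to do now" + "how to do it" |
| Context awareness | Usually fixed | Can be adjusted dynamically based on state |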

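The benefits of this design are:

- Separation of responsibilities: the system prompt handles identity, and the step prompt handles action guidance.
- High flexibility: the step prompt can be adjusted dynamically based on context (as with the browser awareness in Manus).
- High maintainability: the two prompts can be modified and tuned independently.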
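A small sketch of how the two prompts might end up in the message list for one think call. The helper `build_messages`, the shortened prompt texts, and the dict-based message format are all assumptions for illustration; the real framework uses its own message classes.

```python
SYSTEM_PROMPT = "You are a helpful agent. The initial directory is: {directory}"
NEXT_STEP_PROMPT = "Pick the most appropriate tool for the next step."


def build_messages(history: list, next_step_prompt: str, directory: str) -> list:
    """Assemble the message list for one think() call."""
    system = {"role": "system", "content": SYSTEM_PROMPT.format(directory=directory)}
    step = {"role": "user", "content": next_step_prompt}
    return [system, *history, step]


history = [{"role": "user", "content": "Summarize README.md"}]

# Default step guidance.
default_msgs = build_messages(history, NEXT_STEP_PROMPT, "/workspace")

# Context-aware guidance, e.g. while a browser tool is in use.
browser_prompt = NEXT_STEP_PROMPT + "\nCurrent URL: https://example.com"
browser_msgs = build_messages(history, browser_prompt, "/workspace")

# The system message is identical in both; only the final step message differs.
print(default_msgs[0] == browser_msgs[0])
print(default_msgs[-1] == browser_msgs[-1])
```

Because the step prompt is just the last user message, swapping it per step leaves the agent's identity untouched.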

The prompts for Manus are defined as follows:

| Category | Content |
| --- | --- |
| System Prompt | You are OpenManus, an all-capable AI assistant, aimed at solving any task presented by the user. You have various tools at your disposal that you can call upon to efficiently complete complex requests. Whether it's programming, information retrieval, file processing, web browsing, or human interaction (only for extreme cases), you can handle it all. The initial directory is: {directory} |
| Next Step Prompt | Based on user needs, proactively select the most appropriate tool or combination of tools. For complex tasks, you can break down the problem and use different tools step by step to solve it. After using each tool, clearly explain the execution results and suggest the next steps. If you want to stop the interaction at any point, use the terminate tool/function call. |

Qwen-Agent

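Overall flow

The design is broadly similar to OpenManus, so let's go straight to how the full chain is wired together:

Besides Assistant, there is also a simplified ReActChat, which likewise implements the ReAct paradigm: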

    def _run(self, messages, lang='en', **kwargs):
        # 1. Build the ReAct prompt template
        text_messages = self._prepend_react_prompt(messages, lang)
        num_llm_calls_available = MAX_LLM_CALL_PER_RUN  # per-run LLM call budget
        response = 'Thought: '

        while num_llm_calls_available > 0:
            num_llm_calls_available -= 1

            # 2. The LLM generates its reasoning
            output = self._call_llm(messages=text_messages)
            response += output[-1].content

            # 3. Parse Action and Action Input
            has_action, action, action_input, thought = self._detect_tool(output)
            if not has_action:
                break

            # 4. Run the tool to get the Observation
            observation = self._call_tool(action, action_input)
            response += f'\nObservation: {observation}\nThought: '

            # 5. Update the message and continue reasoning
            text_messages[-1].content += thought + f'\nAction: {action}...' + observation

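Compared with ReActChat, however, Assistant not only follows the plan → execute pattern but is also more capable in several respects:

- It integrates RAG.
- It is more automated, which makes for a better user experience.
- It integrates more tightly with Memory, multimodal input, MCP, and other subsystems.

It looks roughly like this:

    User input → [RAG retrieval] → LLM planning → Tool execution → Result integration → Output

                       ↑_____________ feedback loop _____________↑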

RAG

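Qwen-Agent has strong RAG capabilities and supports the following file types:

- PDF (.pdf)
- Word documents (.docx)
- PowerPoint (.pptx)
- Text files (.txt)
- Spreadsheet files (.csv, .tsv, .xlsx, .xls)
- HTML files

In terms of processing flow, documents are preprocessed first (simplified):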

    def call(self, params):
        # 1. Download / read the file
        content = self._read_file(file_path)

        # 2. Extract the content
        text_content = self._extract_text(content, file_type)

        # 3. Split into chunks
        chunks = self._split_content(text_content, page_size=500)

        # 4. Build the list of records with metadata
        records = []
        for i, chunk in enumerate(chunks):
            records.append({
                'content': chunk,
                'metadata': {
                    'source': file_path,
                    'chunk_id': i,
                    'page': page_num
                }
            })
        return records

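Next, keywords are extracted from the user's original query. This step is done mainly by prompting the LLM (the prompt is translated here from its Chinese version):

    Please extract the keywords from the question. Both Chinese and English keywords are required, and you may also add some related keywords that do not appear in the question.

    Split the keywords into individual words such as verbs, nouns, or adjectives wherever possible; do not use long phrases.

    Give the keywords in JSON format, for example:

    {"keywords_zh": ["关键词1", "关键词2"], "keywords_en": ["keyword 1", "keyword 2"]}

    Question: {user_request}

    Keywords: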

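A few tips:

- Use a dedicated prompt template to have the LLM extract keywords.
- Generate Chinese and English keywords at the same time.
- Add keywords that are relevant but absent from the original question.
- Break long phrases down into individual words.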
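Since the model is asked to answer in JSON, the caller has to parse that reply defensively. The sketch below shows one way to do it; `parse_keywords` is a hypothetical helper, not a Qwen-Agent function, and only the two JSON keys are taken from the prompt above.

```python
import json
import re


def parse_keywords(llm_output: str) -> list:
    """Extract a flat, de-duplicated keyword list from the model's reply."""
    # Models often wrap JSON in prose or code fences, so grab the outermost {...}.
    match = re.search(r"\{.*\}", llm_output, flags=re.DOTALL)
    if not match:
        return []
    try:
        data = json.loads(match.group(0))
    except json.JSONDecodeError:
        return []

    keywords = []
    for key in ("keywords_zh", "keywords_en"):
        values = data.get(key)
        if not isinstance(values, list):
            continue
        for word in values:
            word = str(word).strip()
            if word and word not in keywords:
                keywords.append(word)
    return keywords


reply = 'Keywords: {"keywords_zh": ["检索", "文档"], "keywords_en": ["retrieval", "document", "retrieval"]}'
print(parse_keywords(reply))
print(parse_keywords("sorry, I cannot do that"))
```

Returning an empty list on malformed output lets the caller fall back to searching with the raw question instead of failing.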

Then a hybrid retrieval strategy is applied. The simplified code is:

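    def sort_by_scores(self, query, docs):
        # 1. Run every search strategy; each returns (doc_id, chunk_id, score)
        #    tuples ordered from best to worst
        chunk_and_score_list = []
        for search_obj in self.search_objs:
            scores = search_obj.sort_by_scores(query, docs)
            chunk_and_score_list.append(scores)

        # 2. Score fusion
        final_scores = {}
        for chunk_and_score in chunk_and_score_list:
            for i, (doc_id, chunk_id, score) in enumerate(chunk_and_score):
                key = (doc_id, chunk_id)
                if score == POSITIVE_INFINITY:
                    final_scores[key] = POSITIVE_INFINITY
                else:
                    # Reciprocal-rank weighting: 1 / (rank + 60)
                    final_scores[key] = final_scores.get(key, 0) + 1 / (i + 1 + 60)

        # 3. Re-sort by the fused score
        all_scores = [(doc_id, chunk_id, s) for (doc_id, chunk_id), s in final_scores.items()]
        return sorted(all_scores, key=lambda x: x[2], reverse=True)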
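The 1/(rank+60) weighting is the reciprocal rank fusion (RRF) idea: each strategy contributes according to where it ranked a chunk, not according to its raw score. A self-contained toy version, with hypothetical chunk ids and two made-up rankings, looks like this:

```python
from collections import defaultdict

RRF_K = 60  # smoothing constant, matching the 60 in the snippet above


def reciprocal_rank_fusion(rankings: list, k: int = RRF_K) -> list:
    """Fuse several best-first rankings of chunk ids into one ranking."""
    fused = defaultdict(float)
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            fused[chunk_id] += 1.0 / (rank + k)
    return sorted(fused.items(), key=lambda item: item[1], reverse=True)


keyword_ranking = ["c1", "c2", "c3"]  # e.g. from a keyword-based search
vector_ranking = ["c2", "c3", "c1"]   # e.g. from an embedding-based search
fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
print([chunk_id for chunk_id, _ in fused])
```

Here c2 comes out on top because it is ranked first or second by both strategies, even though neither strategy's raw scores are comparable with the other's. That is the main reason to fuse on ranks.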

The recalled chunks are then output in a structured form:

    def format_knowledge_to_source_and_content(result):
        knowledge = []

        # Parse the retrieval results
        for doc in result:
            url, snippets = doc['url'], doc['text']
            knowledge.append({
                'source': f'[文件]({get_basename_from_url(url)})',
                'content': '\n\n...\n\n'.join(snippets)
            })

        return knowledge

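Chinese and English templates are used to render each snippet:

    # Chinese template
    KNOWLEDGE_SNIPPET_ZH = """## 来自 {source} 的内容:

    {content}"""

    # English template
    KNOWLEDGE_SNIPPET_EN = """## The content from {source}:

    {content}"""

The result is injected into the system prompt: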

    def _prepend_knowledge_prompt(self, messages, lang='en', knowledge=''):
        # 1. Fetch the retrieved knowledge
        if not knowledge:
            *_, last = self.mem.run(messages=messages, lang=lang)
            knowledge = last[-1].content

        # 2. Format the knowledge snippets
        knowledge = format_knowledge_to_source_and_content(knowledge)
        snippets = []
        for k in knowledge:
            snippets.append(KNOWLEDGE_SNIPPET[lang].format(
                source=k['source'],
                content=k['content']
            ))

        # 3. Build the knowledge prompt
        knowledge_prompt = KNOWLEDGE_TEMPLATE[lang].format(
            knowledge='\n\n'.join(snippets)
        )

        # 4. Inject it into the system message
        if messages and messages[0].role == SYSTEM:
            messages[0].content += '\n\n' + knowledge_prompt
        else:
            messages = [Message(role=SYSTEM, content=knowledge_prompt)] + messages

        return messages

Support for very large contexts

If the input is too large, it is truncated intelligently. The simplified logic is:

    def _truncate_input_messages_roughly(messages: List[Message], max_tokens: int):
        # 1. Protect the system message
        sys_msg = None
        if messages and messages[0].role == SYSTEM:
            sys_msg = messages[0]
            available_token = max_tokens - _count_tokens(sys_msg)
        else:
            available_token = max_tokens

        # 2. Keep messages starting from the newest
        new_messages = []
        for i in range(len(messages) - 1, -1, -1):
            if messages[i].role == SYSTEM:
                continue
            cur_token_cnt = _count_tokens(messages[i])
            if cur_token_cnt <= available_token:
                new_messages = [messages[i]] + new_messages
                available_token -= cur_token_cnt
            else:
                # 3. Truncate the over-long message intelligently
                if messages[i].role == USER:
                    _msg = _truncate_message(messages[i], max_tokens=available_token)
                    if _msg:
                        new_messages = [_msg] + new_messages
                elif messages[i].role == FUNCTION:
                    # Tool results keep the important information at both head and tail
                    _msg = _truncate_message(messages[i], max_tokens=available_token, keep_both_sides=True)
                    if _msg:
                        new_messages = [_msg] + new_messages
                # The budget is used up once a message has been truncated
                break

        if sys_msg:
            new_messages = [sys_msg] + new_messages
        return new_messages

    def _truncate_message(msg: Message, max_tokens: int, keep_both_sides: bool = False):
        if isinstance(msg.content, str):
            content = tokenizer.truncate(msg.content, max_token=max_tokens, keep_both_sides=keep_both_sides)
        else:
            text = []
            for item in msg.content:
                if not item.text:
                    return None
                text.append(item.text)
            text = '\n'.join(text)
            content = tokenizer.truncate(text, max_token=max_tokens, keep_both_sides=keep_both_sides)
        return Message(role=msg.role, content=content)
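To make the keep_both_sides idea concrete, here is a standalone sketch of head-and-tail truncation. It is an illustration only: tokens are approximated by whitespace-separated words instead of a real tokenizer, and the "..." marker and the even head/tail split are assumptions, not the behavior of the library's `tokenizer.truncate`.

```python
def truncate_tokens(tokens: list, max_tokens: int, keep_both_sides: bool = False) -> list:
    """Trim a token list to at most max_tokens items."""
    if max_tokens <= 0:
        return []
    if len(tokens) <= max_tokens:
        return list(tokens)
    if not keep_both_sides or max_tokens < 3:
        # Plain truncation: keep only the head.
        return tokens[:max_tokens]
    # Reserve one slot for the ellipsis marker, then split the rest head/tail.
    budget = max_tokens - 1
    head = budget - budget // 2
    tail = budget // 2
    return tokens[:head] + ["..."] + tokens[len(tokens) - tail:]


tokens = "a b c d e f g h i j".split()
print(truncate_tokens(tokens, 5))
print(truncate_tokens(tokens, 5, keep_both_sides=True))
```

Keeping both ends suits tool output, where the beginning often carries the command or header and the end carries the final result or error.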

RAG also supports very large contexts:

    class ParallelDocQA(Assistant):
        """Parallel document QA with support for very long documents."""

        def _parse_and_chunk_files(self, messages):
            valid_files = self._get_files(messages)
            records = []
            for file in valid_files:
                # Split into large chunks, suitable for parallel processing
                _record = self.doc_parse.call(
                    params={'url': file},
                    parser_page_size=PARALLEL_CHUNK_SIZE,  # 1000 tokens per chunk
                    max_ref_token=PARALLEL_CHUNK_SIZE
                )
                records.append(_record)
            return records

        def _retrieve_according_to_member_responses(self, messages, user_question, member_res):
            # Second-pass retrieval based on the member responses
            keygen = GenKeyword(llm=self.llm)
            member_res_token_num = count_tokens(member_res)

            # Limit the input length for keyword generation
            unuse_member_res = member_res_token_num > MAX_RAG_TOKEN_SIZE  # 4500 tokens
            query = user_question if unuse_member_res else f'{user_question}\n\n{member_res}'

            # Generate the retrieval keywords
            *_, last = keygen.run([Message(USER, query)])
            keyword = last[-1].content

            # Run the retrieval
            content = self.function_map['retrieval'].call({
                'query': keyword,
                'files': valid_files
            })
            return content

Handling of very long dialogues:

    class DialogueRetrievalAgent(Assistant):
        """This is an agent for super long dialogue."""

        def _run(self, messages, lang='en', session_id='', **kwargs):
            # Process the very long dialogue history
            new_messages = []
            content = []

            # Convert the dialogue history into a document
            for msg in messages[:-1]:
                if msg.role != SYSTEM:
                    content.append(f'{msg.role}: {extract_text_from_message(msg, add_upload_info=True)}')

            # Process the latest user message
            text = extract_text_from_message(messages[-1], add_upload_info=False)
            if len(text) <= MAX_TRUNCATED_QUERY_LENGTH:
                query = text
            else:
                # Extract the query intent
                if len(text) <= MAX_TRUNCATED_QUERY_LENGTH * 2:
                    latent_query = text
                else:
                    latent_query = f'{text[:MAX_TRUNCATED_QUERY_LENGTH]} ... {text[-MAX_TRUNCATED_QUERY_LENGTH:]}'

                # Use the LLM to extract the core query
                *_, last = self._call_llm(
                    messages=[Message(role=USER, content=EXTRACT_QUERY_TEMPLATE[lang].format(ref_doc=latent_query))]
                )
                query = last[-1].content

            # Save the dialogue history to a file
            content = '\n'.join(content)
            file_path = os.path.join(DEFAULT_WORKSPACE, f'dialogue_history_{session_id}_{datetime.datetime.now():%Y%m%d_%H%M%S}.txt')
            save_text_to_file(file_path, content)

            # Run RAG retrieval over that file
            return super()._run(messages=[messages[0]] + [Message(role=USER, content=query)], files=[file_path], **kwargs)
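The query-shortening branch in the middle of `_run` can be isolated as a small pure function. In this sketch `build_latent_query` is a hypothetical helper and the limit of 10 characters is chosen only to keep the demo readable; it returns the text to use plus a flag saying whether an LLM extraction pass would still be needed.

```python
def build_latent_query(text: str, max_len: int) -> tuple:
    """Return (query_text, needs_llm_extraction) for the latest user message."""
    if len(text) <= max_len:
        # Short enough: use the message itself as the query.
        return text, False
    if len(text) <= max_len * 2:
        # Moderately long: pass it whole to the LLM for query extraction.
        return text, True
    # Very long: keep only the head and the tail before asking the LLM.
    return f"{text[:max_len]} ... {text[-max_len:]}", True


long_text = "A" * 10 + "B" * 30 + "C" * 10
print(build_latent_query("short", 10))
print(build_latent_query("x" * 15, 10))
print(build_latent_query(long_text, 10))
```

Keeping the head and tail reflects the assumption that the actual request in a long message usually sits near its beginning or end.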

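Finally

OpenManus adopts a modular architecture with three main layers: the Agent layer, the Tool layer, and the Prompt layer. The layering is clear, the complexity is moderate, and the emphasis is on modularity and extensibility. Qwen-Agent, by contrast, is more like a complete agent ecosystem: it supports RAG and more sophisticated multi-level retrieval, and through step-by-step reasoning it can handle very large contexts, from 8K up to 1M tokens (see "Generalizing an LLM from 8k to 1M Context using Qwen-Agent").

The Tool designs of the two frameworks are much the same and not very complicated, so I won't cover them here.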